The EU AI Act: The Key Takeaways

Definition of AI Systems

The initial definition of ‘artificial intelligence’ in the EU AI Act focused on ‘software’. However, the final version narrowed the definition, with the term now referring to systems “developed through machine learning approaches and logic and knowledge-based approaches.” In full, it states:

“AI system” - “a machine-based system designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments”.

The AI Act also introduces harmonised rules for the placing on the market of General-Purpose AI Models (GPAIs). GPAIs are defined in the AI Act as AI systems intended by the provider to perform general functions like image or speech recognition across various contexts. In a scenario where GPAI models are part of other high-risk AI systems (which are defined below), they will fall under similar compliance requirements. Further guidance on compliance obligations for GPAI providers will be outlined in future implementing acts.

The following table provides a brief definition of the different actors recognised by the AI Act.

| Role | Definition |
|---|---|
| Deployer | A natural or legal person, public authority, agency, or other body using an AI system under its authority, excluding personal non-professional activities. |
| Importer | Any natural or legal person located or established in the EU that places on the market an AI system bearing the name or trademark of a natural or legal person established outside the EU. |
| Distributor | Any natural or legal person in the supply chain, other than the provider or importer, that makes an AI system available on the EU market. |
| Provider | A natural or legal person, public authority, agency, or other body that develops or has developed an AI system or general-purpose AI model, placing it on the market or putting it into service under its own name or trademark, whether for payment or free of charge. |
| Operator | Includes the provider, product manufacturer, deployer, authorised representative, importer, or distributor. |

Scope of Application

The EU AI Act imposes obligations on providers, deployers, importers, distributors and product manufacturers of AI systems with a link to the EU market. The AI Act applies to:

- Providers placing on the market or putting into service AI systems or placing on the market general-purpose AI models in the EU, irrespective of whether those providers are established or located in the EU or a third country

- Deployers of AI systems that have their place of establishment or are located in the EU

- Providers and deployers of AI systems that have their place of establishment or are in a third country, where the output produced by the AI system is used in the EU

- Importers and distributors of AI systems

- Product manufacturers placing on the market or putting into service an AI system together with their product and under their name or trademark

- Authorised representatives of providers, which are not established in the EU

- Affected persons who are in the EU.

The AI Act does not apply to:

- AI systems developed or used exclusively for military, defence, or national security purposes

- Public authorities in a third country and international organisations, where they use AI systems in the framework of international cooperation, agreements for law enforcement and judicial cooperation with the EU or with one or more EU member states. This is provided that such third countries or international organisations provide adequate safeguards concerning the protection of fundamental rights and freedoms of individuals.

- AI systems or AI models, including their output, specifically developed and put into service for the sole purpose of scientific research and development.

- Any research, testing or development activity regarding AI systems or models before they are placed on the market or put into service. Such activities must be conducted in accordance with applicable EU law. Testing in real-world conditions is not covered by this exclusion.

- Natural persons using AI systems during purely personal non-professional activity.
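
For teams that track scope questions in an internal compliance tool, the applicability rules and exclusions above can be sketched roughly as follows. This is a simplified illustration only, not legal advice: the names `UseCase`, `Exclusion`, and `in_scope` are hypothetical, the role strings are assumptions, and conditions such as the "adequate safeguards" requirement for third-country authorities are reduced to a single flag.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Exclusion(Enum):
    """Hypothetical labels for the exclusions listed in the text."""
    MILITARY_DEFENCE_NATIONAL_SECURITY = auto()
    THIRD_COUNTRY_COOPERATION = auto()  # assumes adequate safeguards are in place
    SCIENTIFIC_RESEARCH_ONLY = auto()
    PRE_MARKET_RESEARCH_OR_TESTING = auto()
    PERSONAL_NON_PROFESSIONAL_USE = auto()


# Roles the text lists without a further territorial condition (assumed labels).
UNCONDITIONAL_ROLES = frozenset(
    {"importer", "distributor", "product_manufacturer", "authorised_representative"}
)


@dataclass(frozen=True)
class UseCase:
    role: str
    established_in_eu: bool = False
    placed_on_eu_market: bool = False
    output_used_in_eu: bool = False
    exclusions: frozenset = frozenset()
    real_world_testing: bool = False


def in_scope(uc: UseCase) -> bool:
    """Return True if the simplified rules suggest the AI Act applies."""
    effective = set(uc.exclusions)
    # Testing in real-world conditions is not covered by the pre-market exclusion.
    if uc.real_world_testing:
        effective.discard(Exclusion.PRE_MARKET_RESEARCH_OR_TESTING)
    if effective:
        return False
    if uc.role == "provider":
        # Providers are covered wherever they are established.
        return uc.placed_on_eu_market or uc.output_used_in_eu
    if uc.role == "deployer":
        return uc.established_in_eu or uc.output_used_in_eu
    return uc.role in UNCONDITIONAL_ROLES


if __name__ == "__main__":
    third_country_provider = UseCase(role="provider", placed_on_eu_market=True)
    print(in_scope(third_country_provider))
```

A real assessment would need the full text of the Act and legal review; the sketch only shows how the two lists interact, with exclusions checked before the role-based conditions.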

The AI Act also establishes a new governance structure to supervise AI models. This involves creating an AI Office within the European Commission, tasked with supervising advanced AI models, defining standards and ensuring uniform application of the rules across the EU member states.

Additionally, an independent Scientific Panel will advise the AI Office on GPAI models (general-purpose AI models). The Scientific Panel will be composed of experts selected by the European Commission based on up-to-date scientific or technical expertise in the field of AI. The panel will assist in developing evaluation methodologies, offer guidance on influential models, and oversee safety considerations.

The EU AI Act uses a risk-based approach. What does this mean?

The European Commission drafted the AI Act using a risk-based approach that sorts AI systems into risk categories: unacceptable risk, high risk, and limited risk.

Prohibited Practices

Unacceptable levels of risk correspond to prohibited AI practices. According to the AI Act, the following AI practices are prohibited:

- Covert influence (manipulating individuals subconsciously or through deceptive methods);

- Targeting of vulnerabilities (where the system exploits a person's age, disability, or socioeconomic status);

- Social behaviour scoring;

- Predictive policing (profiling individuals to predict criminal behaviour, with specific exceptions);

- Real-time biometric surveillance in public spaces (with limited exceptions for law enforcement);

- Facial recognition databases (built or expanded through untargeted scraping of facial images from the internet or CCTV footage);

- Deducing emotions (in settings such as workplaces and educational institutions, except in limited circumstances);

- Biometric categorisation (to infer characteristics like race, political stance, or sexual orientation, except in limited circumstances).

Examples of when real-time biometric surveillance by law enforcement may be strictly necessary include:

- Searching for victims of abduction, trafficking, or sexual exploitation and missing persons

- Preventing substantial and imminent threats to life or physical safety, or preventing terrorist attacks

- Locating or identifying suspects for serious criminal investigations or prosecutions.
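
As a rough illustration of how the prohibited practices and the law-enforcement exceptions relate, the two lists above can be encoded as a simple screening check. This is a sketch under stated assumptions: the string labels, the constant names, and the function `is_prohibited` are hypothetical, and only the real-time biometric surveillance exception is modelled. The other "limited circumstances" mentioned in the list are deliberately left out.

```python
from typing import Optional

# Hypothetical labels for the prohibited practices listed in the text.
PROHIBITED_PRACTICES = frozenset({
    "covert_influence",
    "vulnerability_targeting",
    "social_scoring",
    "predictive_policing",
    "real_time_biometric_surveillance",
    "facial_recognition_database",
    "emotion_inference",
    "biometric_categorisation",
})

# Hypothetical labels for the strictly necessary law-enforcement purposes.
LAW_ENFORCEMENT_EXCEPTIONS = frozenset({
    "victim_search",
    "imminent_threat_prevention",
    "serious_crime_suspect_identification",
})


def is_prohibited(practice: str, law_enforcement_purpose: Optional[str] = None) -> bool:
    """Return True if the practice falls under the simplified prohibition list."""
    if practice not in PROHIBITED_PRACTICES:
        return False
    # Only real-time biometric surveillance has its exception modelled here.
    if (
        practice == "real_time_biometric_surveillance"
        and law_enforcement_purpose in LAW_ENFORCEMENT_EXCEPTIONS
    ):
        return False
    return True


if __name__ == "__main__":
    print(is_prohibited("social_scoring"))
    print(is_prohibited("real_time_biometric_surveillance", "victim_search"))
```

A check like this can only flag candidates for legal review; whether a use is "strictly necessary" is a case-by-case judgement that a lookup table cannot make.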

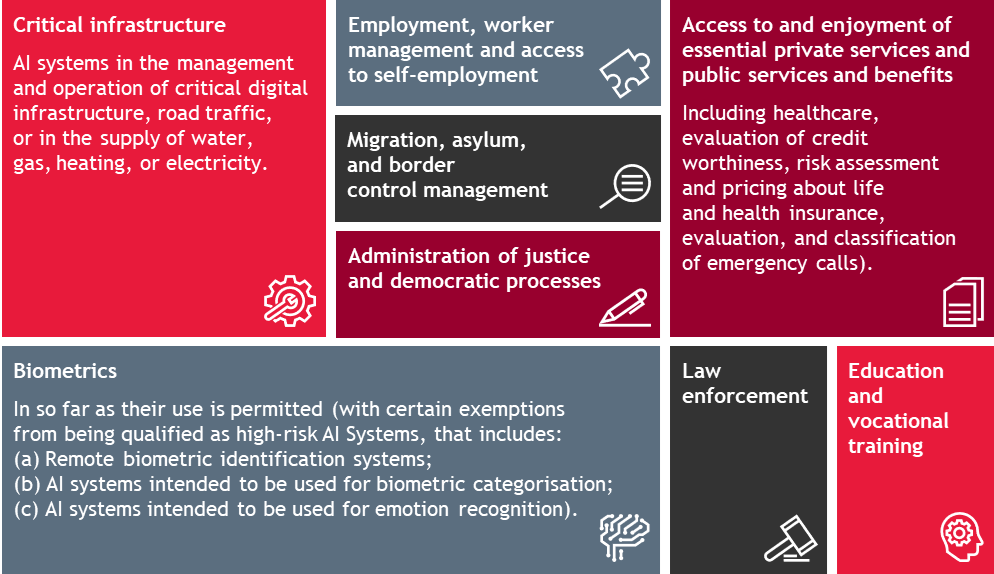

An AI system is considered high-risk if it is intended to be used as a safety component of a product, or is itself a product, and is required to undergo a third-party conformity assessment before being placed on the market. In addition, Annex III of the AI Act provides a list of high-risk AI systems: