Navigating the EU Artificial Intelligence (AI) Act: Implications and Strategies for UK Businesses


In April 2021, the European Commission proposed the first-of-its-kind AI regulatory framework for the EU. The proposal, in the form of the draft AI Act, follows a risk-based approach: AI systems would be assessed and categorised according to the level of risk they pose to users, and that level of risk would determine how stringent the applicable regulatory requirements are.

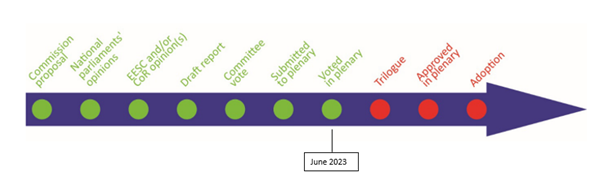

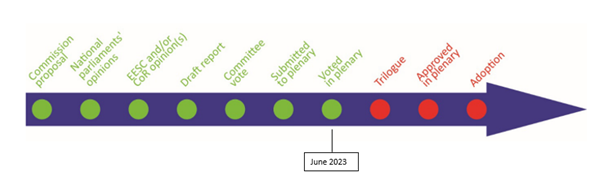


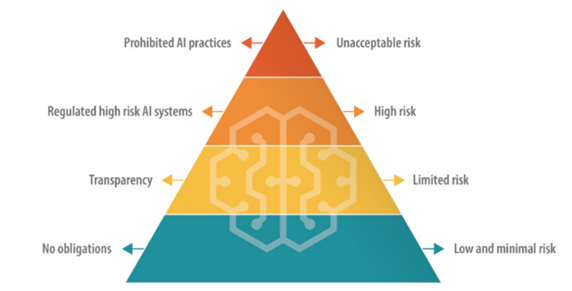

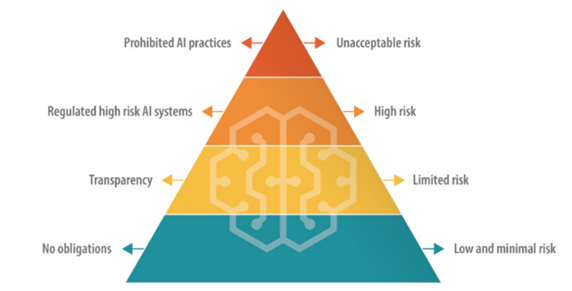


On 14 June 2023, the European Parliament adopted its much-awaited negotiating position on the draft AI Act. If accepted, it could substantially amend the European Commission’s initial proposal. Talks with EU member states in the Council on the final form of the law are now ongoing, with the aim of reaching agreement by the end of 2023 and adopting the Act in early 2024.

Figure 1: The EU AI Act Implementation Timeline (Source: European Parliament)

EU Artificial Intelligence (AI) Act

1. Overview and purposes

The draft AI Act lays down a harmonised legal framework for the development, supply and use of AI products and services in the EU. It seeks to achieve the following specific objectives:

- Ensuring that AI systems placed on the EU market are safe and respect existing EU law;

- Ensuring legal certainty to facilitate investment and innovation in AI;

- Enhancing governance and effective enforcement of EU law on fundamental rights and safety requirements applicable to AI systems; and

- Facilitating the development of a single market for lawful, safe, and trustworthy AI applications and preventing market fragmentation.

If adopted, the AI Act will apply to:

- providers that put AI systems into service in the EU or place them on the EU market, irrespective of whether those providers are established in the EU or in a third country;

- users of AI systems in the EU; and

- providers and users of AI systems that are located in a third country where the output produced by the system is used in the EU.

The AI Act will not apply to:

- AI systems developed or used exclusively for military purposes;

- public authorities in a third country;

- international organisations; and

- authorities using AI systems in the framework of international agreements for law enforcement and judicial cooperation.

The European Commission designed the AI Act around a risk-based approach, which sorts AI systems into a 'pyramid' of four risk levels: unacceptable risk, high risk, limited risk, and low or minimal risk.

Figure 2: The EU AI Act 'Pyramid of Risks' (Source: European Parliament)

AI applications would be regulated only as strictly necessary to address specific levels of risk. The unacceptable-risk and high-risk categories cover the following scenarios:

a) Unacceptable risk (prohibited AI practices): Title II (Article 5) of the proposed AI Act explicitly bans harmful AI practices that are considered a clear threat to people's safety, livelihoods and rights because of the “unacceptable risk” they create. Accordingly, it would be prohibited to place on the market, put into service or use in the EU:

- AI systems that deploy harmful manipulative “subliminal techniques”;

- AI systems that exploit specific vulnerable groups (physical or mental disability);

- AI systems used by public authorities, or on their behalf, for social scoring purposes;

- “Real-time” remote biometric identification systems in publicly accessible spaces for law enforcement purposes, except in a limited number of cases.

b) High risk: the following AI systems would be classified as high-risk:

- AI systems used in products falling under the EU’s product safety legislation, such as toys, aviation, cars, medical devices and lifts; and

- AI systems falling into eight specific areas that will have to be registered in an EU database:

  - Biometric identification and categorisation of natural persons;

  - Management and operation of critical infrastructure;

  - Education and vocational training;

  - Employment, worker management and access to self-employment;

  - Access to and enjoyment of essential private services and public services and benefits;

  - Law enforcement;

  - Migration, asylum, and border control management;

  - Administration of justice and democratic processes.

The draft AI Act imposes specific obligations on providers. For example, providers of high-risk AI systems would be required to register their systems in an EU-wide database managed by the European Commission before placing them on the market or putting them into service.

Moreover, high-risk AI systems would have to comply with a range of requirements, in particular on risk management, testing, technical robustness, training data and data governance, transparency, human oversight and cybersecurity (Articles 8 to 15).

Providers based outside the EU would be required to appoint an authorised representative in the EU to, among other things, ensure the conformity assessment, establish a post-market monitoring system and take corrective action as needed.

5. Fines/Penalties

The draft AI Act provides for a sliding scale of administrative fines depending on the severity of the infringement, up to a maximum of €30 million or 6% of total worldwide annual turnover, whichever is higher, for the most serious infringements. EU member states would need to lay down more detailed rules on penalties, for example on whether and to what extent administrative fines may be imposed on public authorities.
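To make the “whichever is higher” rule concrete, the ceiling for the most serious infringements can be written as the maximum of a fixed amount and a turnover-based amount. The Python sketch below assumes the figures in the Commission's original proposal (€30 million or 6%) and a turnover expressed in whole euros; the function and constant names are hypothetical, and the sketch computes only the statutory upper limit, not the fine a regulator would actually impose.

```python
# Ceiling for the most serious infringements under the Commission's original
# proposal: EUR 30 million or 6% of total worldwide annual turnover,
# whichever is higher. Names and figures are illustrative assumptions.
FIXED_CAP_EUR = 30_000_000
TURNOVER_PERCENT = 6


def max_fine_cap_eur(worldwide_annual_turnover_eur: int) -> int:
    """Return the maximum possible fine (the ceiling), in whole euros.

    This is only the upper limit; the fine actually imposed would depend on
    the severity of the infringement and on member-state rules on penalties.
    """
    if worldwide_annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_based_cap = worldwide_annual_turnover_eur * TURNOVER_PERCENT // 100
    # "Whichever is higher": take the larger of the two amounts.
    return max(FIXED_CAP_EUR, turnover_based_cap)


if __name__ == "__main__":
    for turnover in (200_000_000, 500_000_000, 1_000_000_000):
        print(f"turnover EUR {turnover:,} -> ceiling EUR {max_fine_cap_eur(turnover):,}")
```

For example, a company with a worldwide annual turnover of €200 million would face a ceiling of €30 million (6% would be only €12 million), whereas at €1 billion the ceiling rises to €60 million. The two amounts are equal at €500 million, above which the percentage-based figure is the higher one.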

The EU Parliament’s Proposed Amendments

The European Parliament adopted its negotiating position on 14 June 2023, proposing substantial amendments to the Commission's initial text. The most significant changes are likely to be:

- Definition of AI: Parliament amended the definition of AI systems to align it with the definition agreed by the Organisation for Economic Co-operation and Development (OECD). Parliament also introduces definitions of “general purpose AI system” and “foundation model” into EU law.

- Prohibited practices: Parliament substantially amended the list of AI systems prohibited in the EU. It wants to ban the use of biometric identification systems in the EU for both real-time and ex-post use, not only for real-time use as proposed by the Commission (ex-post use would be allowed only for serious crime and with prior judicial authorisation). Parliament also wants to ban:

  - all biometric categorisation systems using sensitive characteristics (e.g., gender, race, ethnicity, citizenship status, religion, political orientation);

  - predictive policing systems (based on profiling, location or past criminal behaviour);

  - emotion recognition systems used in law enforcement, border management, the workplace and educational institutions; and

  - AI systems that indiscriminately scrape biometric data from social media or CCTV footage to create facial recognition databases.

- High-risk AI systems: While the Commission proposed to categorise automatically all systems in certain areas or use cases as “high-risk”, Parliament adds the requirement that a system must pose a 'significant risk' to qualify as high-risk. AI systems that risk harming people's health, safety, fundamental rights or the environment would be considered to fall within high-risk areas. In addition, AI systems used to influence voters in political campaigns, and AI systems used in the recommender systems of social media platforms designated as very large online platforms under the Digital Services Act, would be considered high-risk. Finally, Parliament would require those deploying a high-risk system in the EU to carry out a fundamental rights impact assessment.

How will the EU AI Act impact the UK?

If the EU AI Act is enacted, it will apply to UK entities that deploy AI systems within the EU, offer them on the EU market, or engage in any other activity governed by the Act. These UK organisations will have to ensure that their AI systems comply, or risk financial penalties and reputational damage.

However, the AI Act could have wider implications, with a ripple effect on UK organisations that operate on the UK market only. This is because, as with the General Data Protection Regulation (GDPR), the AI Act is likely to set a global standard in this field. This could mean two things: first, UK companies that proactively embrace and comply with the AI Act can differentiate themselves on the UK market, attracting customers who prioritise ethical and accountable AI solutions; secondly, because the AI Act is likely to become a “Golden Standard”, we may see the UK’s domestic regulations evolving towards harmonisation with it.

The UK has already set out its own approach to AI governance in a white paper published in March 2023. In essence, the Government aims to regulate AI in a way that supports innovation while protecting people’s rights and interests. Some of the principles laid out in the white paper echo the EU’s stance on AI. For example, the Government intends to create a new regulatory framework for high-risk AI systems. It also plans to require companies to conduct risk assessments before using AI tools, which is consistent with the UK GDPR where an AI tool processes personal data, given that data protection by design and by default is a fundamental principle of that regime. However, the white paper states that these principles will not be enforced through legislation, at least not initially. It is therefore not yet clear what the uptake of these principles will be, or to what degree their voluntary nature will affect wider adoption by organisations across the UK.

Further, the EU AI Act is a pivotal piece of legislation that encourages voluntary compliance, through codes of conduct, even by companies that may not initially fall within its scope (Article 69). UK companies that offer AI services in the EU, and particularly those that also use AI technologies to deliver their services within the region, are therefore likely to be affected by the Act. Since many UK businesses have a market reach extending far beyond the UK, the EU AI Act is particularly relevant to them.

How will the EU AI Act impact UK Companies leveraging Generative AI or ‘Foundation Models’?

Owing to the growing popularity and pervasive impact of Generative AI and Large Language Models (‘LLMs’) in 2023, a key part of the significant amendments to the EU AI Act in June 2023 concerns the use of Generative AI.

Foundation models are a class of large-scale machine learning models that serve as the basis for building a wide range of AI applications. These models are pre-trained on vast amounts of data, enabling them to learn complex patterns, relationships and structures within the data. By fine-tuning foundation models on specific tasks or domains, developers can create AI systems with remarkable capabilities in areas such as natural language processing, computer vision and decision-making. Examples of foundation models include OpenAI’s GPT-4 (which powers ChatGPT) and Google’s BERT and PaLM 2. Due to their versatility and adaptability, foundation models have become a cornerstone in the development of advanced AI applications across various industries.

It is important to bear in mind the implications of the EU AI Act for companies currently developing apps using Generative AI, Large Language Models (LLMs) and similar AI technologies, such as ChatGPT, Google Bard, Anthropic's Claude and Microsoft's Bing Chat ('Bing AI'). These companies should assess the potential impact of the Act on their operations and take the necessary steps towards compliance, even if they are not the legislation's primary target. By doing so, they can stay ahead of the curve in a fast-evolving AI landscape.

Companies using these AI tools and foundation models to deliver their services would have to identify and manage the associated risks (Article 28b) and comply with the transparency obligations set out in Article 52(1).

The EU AI Act aims to set the standard in AI safety, ethics and responsible AI use, and mandates transparency and accountability. The text as amended by Parliament in June 2023 further sets out obligations in Article 52(3) that address the use of Generative AI.

Specifically,

“Users of an AI system that generates or manipulates text, audio or visual content that would falsely appear to be authentic or truthful and which features depictions of people appearing to say or do things they did not say or do, without their consent (‘deep fake’), shall disclose in an appropriate, timely, clear and visible manner that the content has been artificially generated or manipulated, as well as, whenever possible, the name of the natural or legal person that generated or manipulated it. Disclosure shall mean labelling the content in a way that informs that the content is inauthentic and that is clearly visible for the recipient of that content. To label the content, users shall take into account the generally acknowledged state of the art and relevant harmonised standards and specifications.” – Amendment to Article 52 (3) - subparagraph 1.

The Draft AI Act & GDPR Interplay

The draft AI Act complements the GDPR by safeguarding fundamental rights and aligning with principles such as transparency and fairness. It acknowledges that AI often involves the processing of personal data, which requires compliance with both regimes and may call for data protection impact assessments (DPIAs). High-risk AI systems face stricter requirements under the draft AI Act, but GDPR requirements for processing personal data continue to apply.

The draft AI Act's provisions on AI testing are also aligned with GDPR principles. Both regimes emphasise accountability, requiring organisations to demonstrate compliance and maintain records, and both provide for substantial fines for non-compliance, scaled to the severity of the violation. Together, they aim to ensure responsible and lawful AI development and data protection.

When navigating this ever-evolving compliance landscape, therefore, organisations will have to be aware of, and continuously meet, their obligations under the GDPR. For example, for AI systems that involve solely automated processing producing legal or similarly significant effects, organisations will have to demonstrate that they have an appropriate lawful basis in place and offer individuals the minimum required safeguards (such as the right to obtain human intervention), as well as meaningful transparency about the processing involved.

Conclusion

The draft EU AI Act represents a significant regulatory development for UK organisations that intend to offer AI systems in the European market. By proactively assessing compliance requirements, ensuring data ethics and adopting a strategic approach to AI governance, UK businesses can navigate the complexities of the EU AI Act while continuing to leverage the opportunities presented by the AI revolution. At the same time, this regulatory development may have a spillover effect on the UK market, with organisations seeking to adhere to the new “Golden Standard” set by the AI Act and, potentially, UK lawmakers following suit to keep up with the new reality. As the global AI regulatory landscape evolves, however, organisations must not ignore their ongoing obligations under the GDPR, especially given that many AI systems are likely to involve solely automated processing of personal data.

If you have any queries or would like further information, please visit our data protection services section or contact Christopher Beveridge.
